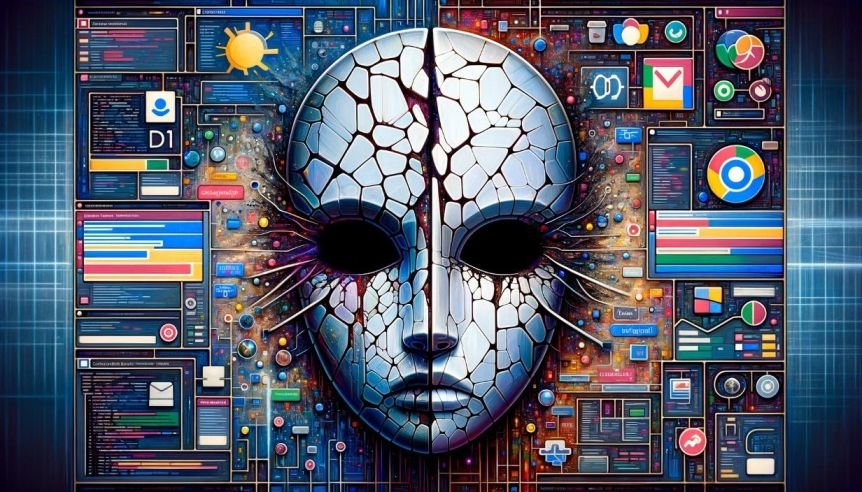

Critical Flaws Exposed in OpenAI’s ChatGPT and Google’s Bard

In the fast-paced and ever-evolving cybersecurity landscape, a new challenge is rapidly gaining prominence: the vulnerability of large language models (LLMs) like OpenAI’s ChatGPT and Google’s Bard to “indirect prompt injection” attacks. This emerging threat, which can manipulate AI systems to perform unintended actions, is sending shockwaves through the cybersecurity community and beyond.

The Growing Threat of Indirect Prompt Injection

Recent experiments have alarmingly shown that it’s possible to manipulate AI-driven chatbots into engaging in deceptive activities, such as posing as scammers. This manipulation is achieved through hidden instructions embedded in seemingly innocuous sources like web pages, which command the AI to act in malicious ways. This type of cyberattack is not just a theoretical concern; it represents a significant and rapidly evolving threat.

Cybersecurity Industry’s Race Against AI Vulnerabilities

As generative AI systems become increasingly integrated into the operations of major corporations and startups alike, the cybersecurity industry is racing to mitigate the potential risks these systems pose. These risks extend beyond just personal data breaches; they threaten corporate security as well. While there’s no single solution to fully safeguard against these threats, implementing standard security practices can significantly minimize the risks.

Experts Weigh In on AI Security Concerns

Leading voices in AI and cybersecurity, including Google’s DeepMind CISO Vijay Bolina, stress the importance of understanding AI’s susceptibility to attacks. The integration of LLMs with the internet and various plug-ins introduces new vulnerabilities, particularly as these systems process increasing amounts of sensitive personal and corporate data.

Understanding Prompt Injection Attacks

Prompt injection attacks come in two forms: direct and indirect. Direct prompt injections involve users intentionally steering LLMs towards harmful outputs. Indirect injections are more insidious, where the harmful instructions come from a third-party source, such as a website or document the AI system accesses.

Nvidia’s principal security architect Rich Harang emphasizes the fundamental risk: the influence of input on LLMs’ output. This influence means that anyone who can feed data into an LLM can potentially manipulate its response.

Real-World Implications and Research

Security researchers have shown how these vulnerabilities could be exploited for data theft, resume manipulation, and remote code execution. These concerns are so pressing that they’ve drawn attention from national cybersecurity agencies, including the UK’s National Cybersecurity Center.

Responses from AI Giants

OpenAI acknowledges the seriousness of prompt injections and is actively researching this area. Similarly, Microsoft is dedicating significant resources to tackle these security issues, focusing on identifying and blocking suspicious inputs.

AI as a Double-Edged Sword

While AI systems introduce new security challenges, they also offer solutions. Google uses specially trained models to identify malicious inputs, and Nvidia has released open-source guardrails to add restrictions to models. However, these are partial solutions, as the full range of potential malicious prompts is unknown.

Best Practices for AI Deployment

To counter these risks, deploying LLMs requires adherence to security industry best practices. This approach involves thoughtful integration of these models into applications and services, considering the source and design of any third-party inputs, like plug-ins.

A New Paradigm in Cybersecurity

The key takeaway for companies looking to implement LLMs is the necessity of establishing trust boundaries. Any LLM that interacts with external inputs should be treated with the same caution as one would approach a random internet user. This perspective is crucial for maintaining robust cybersecurity in the age of advanced AI.

In conclusion, as we embrace the potential of AI-driven technologies, understanding and mitigating their vulnerabilities is paramount. The intersection of AI and cybersecurity presents a complex but navigable challenge, demanding vigilance, innovation, and proactive strategies. Stay updated with the latest in AI and cybersecurity at XpressHack.com, your trusted source for navigating the digital frontier.